OpenAI’s annual developer conference, DevDay 2025, took place on October 6, 2025, in San Francisco. OpenAI’s CEO, Sam Altman, along with other presenters from the company, introduced a range of new features and tools intended to help developers and businesses build more advanced AI applications. In the first minutes of the keynote, Altman highlighted that 4 million developers now build on OpenAI’s platform and that ChatGPT has more than 800 million weekly users. He also said the API now processes more than 6 billion tokens per minute.

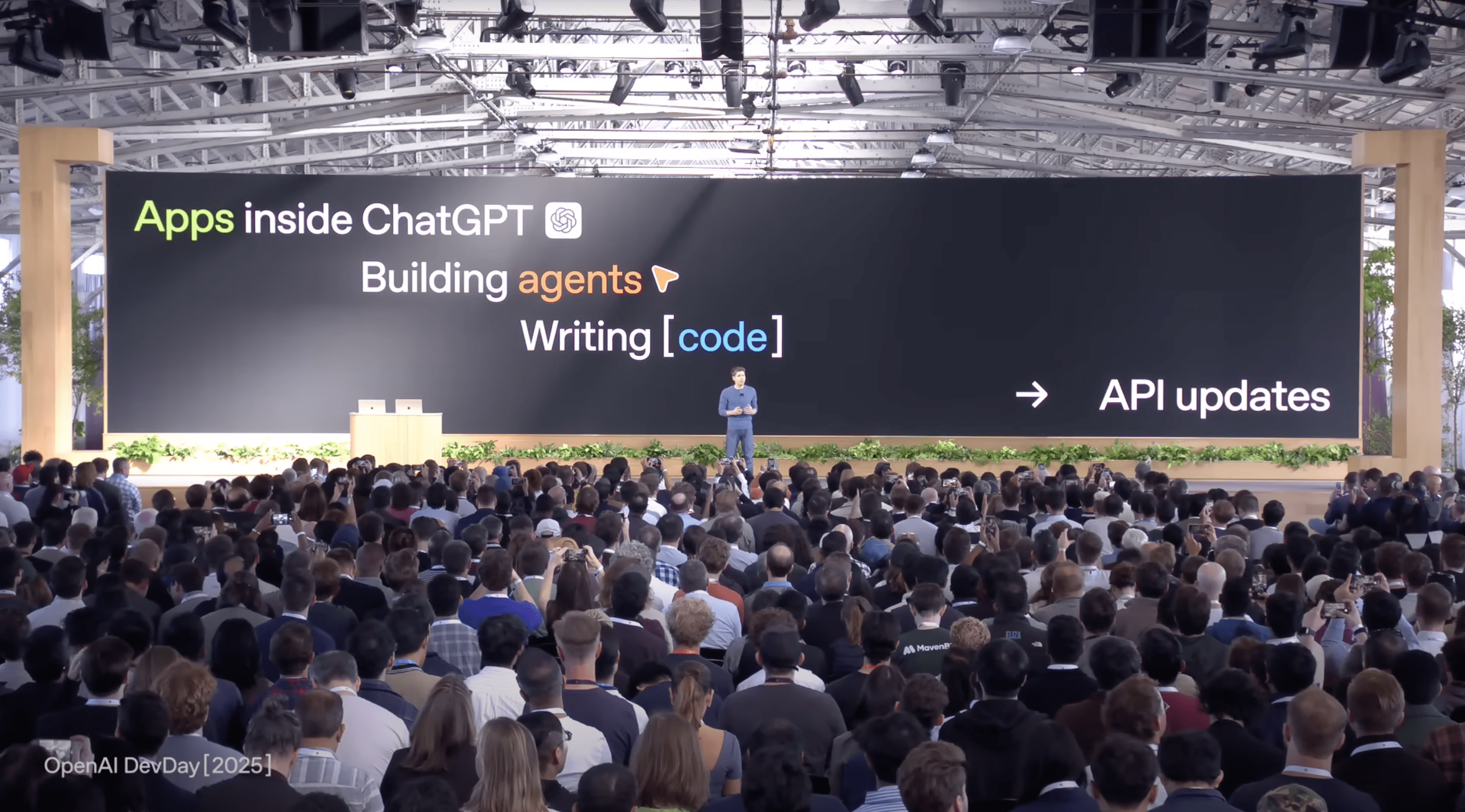

At the start of the keynote, Sam Altman outlined four main themes OpenAI is focusing on to make it easier for developers to build with AI:

- Apps inside ChatGPT

- Easier to build AI agents

- Writing code with AI

- Updates to OpenAI’s API

Apps Inside ChatGPT using AppsSDK #

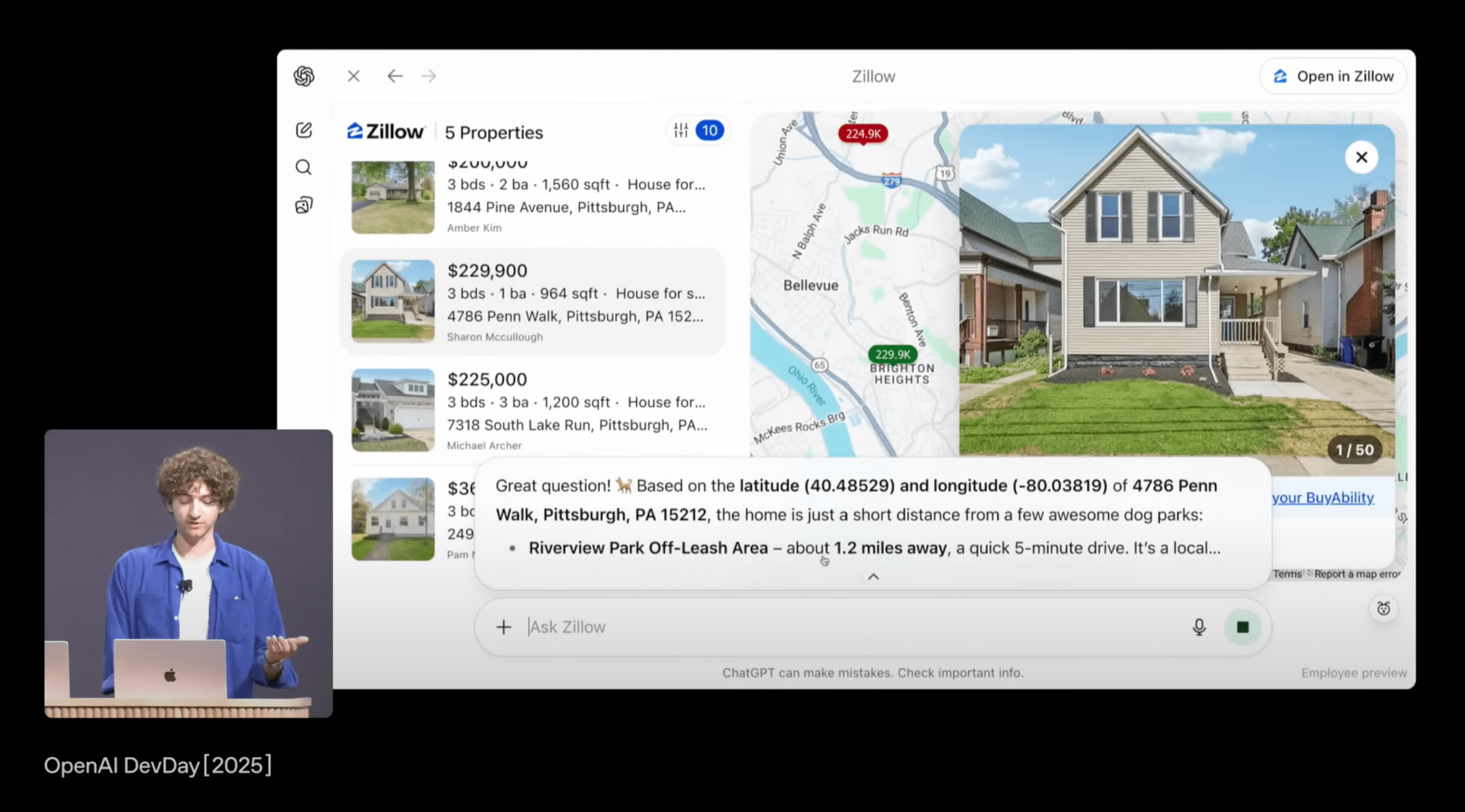

Sam Altman announced AppsSDK, a new framework that allows developers to build applications that run directly inside ChatGPT. Developers can connect their apps’ data, trigger actions, and create custom interactive user interfaces within ChatGPT. AppsSDK is built on top of MCP (Model Context Protocol). OpenAI has published the standard so that developers can easily integrate their apps with ChatGPT. This will allow developers to reach hundreds of millions of ChatGPT users.
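Since AppsSDK builds on MCP, it may help to see what a tool call looks like on the wire. MCP messages are JSON-RPC 2.0, and a client asks a server to run a tool with the `tools/call` method. The sketch below uses only the Python standard library and is not the AppsSDK itself; the `create_playlist` tool, its `title` argument, and the reply text are hypothetical names made up for illustration.

```python
import json


def make_tool_call(request_id: int, tool_name: str, arguments: dict) -> str:
    """Serialize a JSON-RPC 2.0 request asking an MCP server to run a tool."""
    message = {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(message)


def handle_tool_call(raw: str) -> str:
    """Toy server side: run the one hypothetical tool and wrap its result."""
    request = json.loads(raw)
    params = request.get("params", {})
    reply = {"jsonrpc": "2.0", "id": request["id"]}

    if request["method"] != "tools/call":
        # Standard JSON-RPC code for an unsupported method.
        reply["error"] = {"code": -32601, "message": "Method not found"}
    elif params.get("name") != "create_playlist":
        # Unknown tool name, reported as invalid params.
        reply["error"] = {"code": -32602, "message": "Unknown tool"}
    else:
        title = params["arguments"]["title"]
        # Tool results carry a list of content items plus an error flag.
        reply["result"] = {
            "content": [{"type": "text", "text": f"Created playlist '{title}'"}],
            "isError": False,
        }
    return json.dumps(reply)


raw_request = make_tool_call(1, "create_playlist", {"title": "Road Trip"})
print(handle_tool_call(raw_request))
```

A real server would also advertise its tools through `tools/list` and would normally be written with an MCP SDK rather than by hand; the point here is only the shape of the request and the result.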

If a user is already subscribed to your product, they can log in right from the conversation. OpenAI also plans to launch several ways to monetize apps in ChatGPT, including the new Agentic Commerce Protocol.

Sam Altman demonstrated how the Figma app works inside ChatGPT. The user uploads a sketch of a diagram and then asks ChatGPT to create a more polished version using Figma. The user can then edit the diagram directly inside ChatGPT using Figma’s tools.

ChatGPT can also suggest apps to users based on the context of their conversation. For example, if a user is planning a music playlist, ChatGPT might suggest using the Spotify app to create and manage it.

AppsSDK provides an API to expose context back to ChatGPT from your app. This allows ChatGPT to understand what the user is doing in your app and provide relevant suggestions or assistance.

Launch partners for AppsSDK include Expedia, Instacart, Booking.com, Canva, and more. Users can try these apps in ChatGPT starting today, and more apps will be added in the coming weeks. AppsSDK is available to developers in preview right now. Later this year, developers will be able to submit their apps for review and publication in ChatGPT. ChatGPT will also get an app directory where users can discover and try apps.

Easier to Build AI Agents with AgentKit #

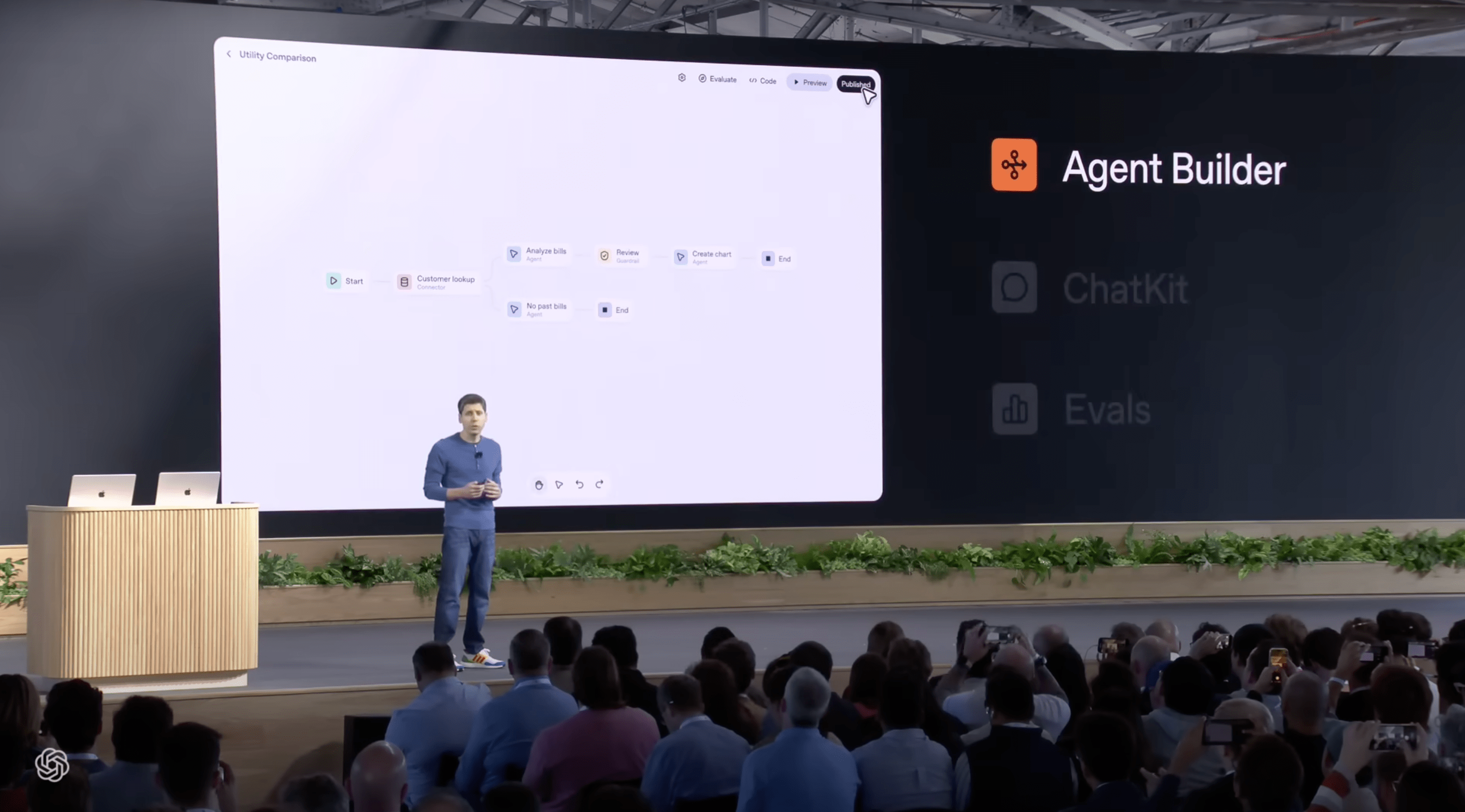

Sam Altman announced AgentKit, a new OpenAI toolkit designed to help you take AI agents from prototype to production. It bundles what a developer needs to build, deploy, and optimize agentic workflows with far less friction. AgentKit has four core components.

Agent Builder #

The first core component of AgentKit is “Agent Builder”, a no-code visual interface for building agents. It’s a fast way to design logic steps, test flows, and ship ideas. It’s built on top of the Responses API.

ChatKit #

Using ChatKit, developers can create custom chat experiences with their own branding, UI, and functionality, along with whatever else makes their product unique.

Evals for Agents #

The third core component of AgentKit is “Evals for Agents”. OpenAI is shipping new features dedicated to measuring agent performance. Trace grading helps developers understand an agent’s decisions step by step, and datasets let them assess individual agent nodes. OpenAI also provides automatic prompt optimization.

Connector Registry #

Using Connector Registry, developers can securely connect agents to their internal tools and third-party systems through an admin control panel, keeping everything safe and under their control.

AgentKit is available for developers starting today.

Writing Code with AI #

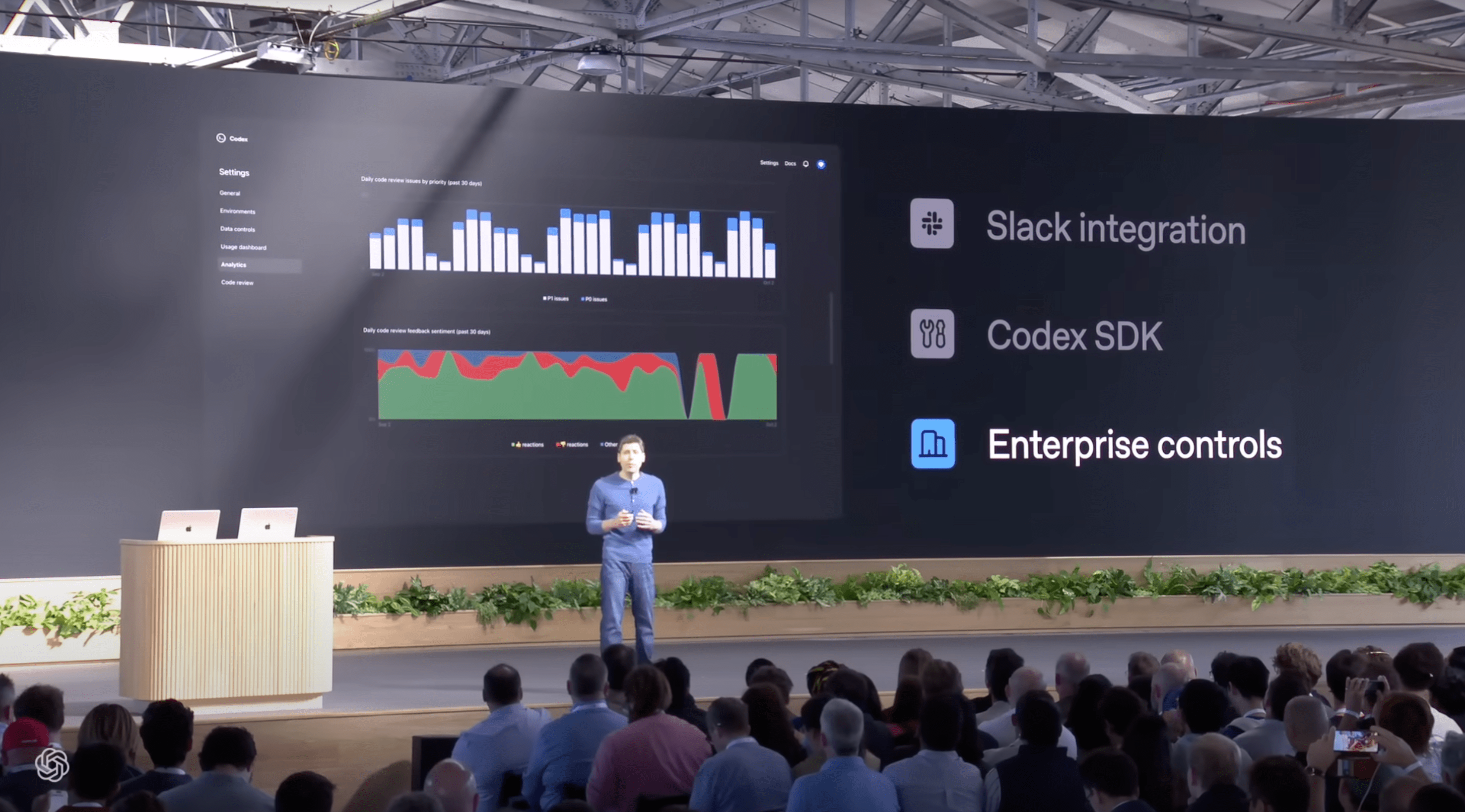

Sam Altman talked about “Codex”, OpenAI’s coding agent that helps developers write, understand, and debug code. Codex is now integrated into popular IDEs like VS Code, JetBrains, and Neovim, and is also available in GitHub and the terminal. Altman said that Codex usage has grown rapidly: since early August, daily messages are up 10x across all platforms. Codex has also served more than 40 trillion tokens since its release.

Altman also announced that Codex is now out of research preview and generally available for all developers. OpenAI introduced three new features for Codex:

- Slack integration

- Codex SDK

- Enterprise controls

Slack Integration #

Developers can now use Codex directly within Slack. This allows developers to get code suggestions, generate snippets, and even debug code right from their Slack workspace.

Codex SDK #

Developers can now extend and automate Codex in their own workflows.

Enterprise Controls #

For enterprise customers, OpenAI is introducing new controls to manage and monitor Codex usage. These include environment controls, monitoring, analytics dashboards, and more.

Updates to OpenAI’s API #

Sam Altman announced that GPT-5 Pro is now available in the API. GPT-5 Pro is aimed at really hard tasks in domains like finance, legal, and healthcare, where you need high accuracy and depth of reasoning.
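For readers who want to try it, here is a minimal sketch of what a request could look like. It assumes the model is exposed through the Responses API endpoint under the identifier `gpt-5-pro`, and that your key is stored in the `OPENAI_API_KEY` environment variable; check the official model list before relying on either. The prompt is made up, and the sketch only builds the HTTP request with the standard library, leaving the actual send commented out.

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/responses"


def build_request(prompt: str, model: str = "gpt-5-pro") -> urllib.request.Request:
    """Build (but do not send) a POST request for the Responses API."""
    # "gpt-5-pro" is an assumed model identifier; confirm it in the docs.
    body = json.dumps({"model": model, "input": prompt}).encode("utf-8")
    api_key = os.environ.get("OPENAI_API_KEY", "")
    return urllib.request.Request(
        API_URL,
        data=body,
        headers={
            "Authorization": f"Bearer {api_key}",
            "Content-Type": "application/json",
        },
        method="POST",
    )


request = build_request("Explain the main risks in this loan covenant.")

# To actually send it (needs a valid key and network access):
# with urllib.request.urlopen(request) as response:
#     print(json.load(response))
```

In practice most developers would use OpenAI’s official client library instead of raw HTTP; the standard-library version just keeps the example dependency-free.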

OpenAI is also releasing a new voice model called “gpt-realtime-mini”. It’s a smaller, 70% cheaper version of the advanced voice model that OpenAI announced two months earlier. According to OpenAI, the new model has the same voice quality and expressiveness as the larger one.

Altman also announced that OpenAI is releasing a preview of “Sora 2” in the API. Developers can now access the same model that powers Sora 2’s video outputs right in their applications. API users can customize video length, aspect ratio, and resolution, and can easily remix videos.

You can see the full keynote below: